Descriptions

What is Provided:

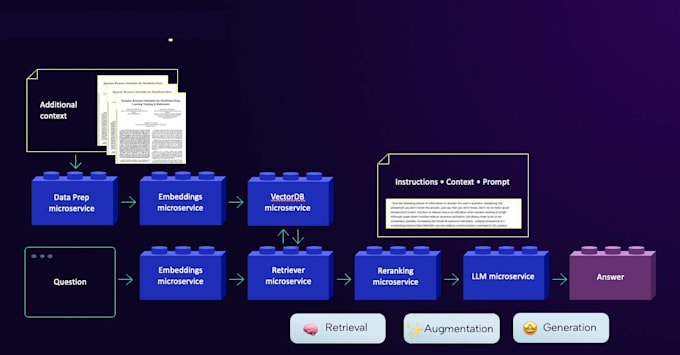

- A fully configured RAG infrastructure (retriever + large language model + vector store + API layer)

- Deployment on AWS, Google Cloud (GCP), or Microsoft Azure

- Infrastructure management with Kubernetes (EKS, GKE, or AKS)

- Integration with tools such as LangChain, LlamaIndex, Pinecone, Weaviate, FAISS, or your preferred vector database

- CI/CD pipelines for scalable, repeatable deployments

- Optional additions: API gateway, authentication, monitoring, and logging configuration

Applications:

- AI-driven internal knowledge repositories

- Conversational agents capable of interpreting documentation

- Semantic search capabilities for organizational data

- Research and Development environments for testing

- Production-ready AI platforms with complete MLOps implementation

Technology Components (Customization is possible):

- Large Language Model: OpenAI, Anthropic, Hugging Face models, and others.

- Vector Database: FAISS, Pinecone, Chroma, Weaviate, and others.

- LangChain / LlamaIndex / RAG Stack

- Kubernetes: EKS / GKE / AKS

- Terraform / Helm / ArgoCD / GitOps (upon request)

Why Select This Service?

I am a DevOps and AI Engineer with hands-on experience building cloud-native, scalable, and cost-effective RAG architectures for startups and established businesses. I work closely with each client to deliver customized, secure, and maintainable solutions.

Packages

| Packages | Basic (2,900€) | Standard (6,800€) | Premium (10,000€) |
|---|---|---|---|
| Delivery Time | 3 days | 2 days | 4 days |
| Number of Revisions | 1 | 3 | Unlimited |

You can add service add-ons on the next page.