Descriptions

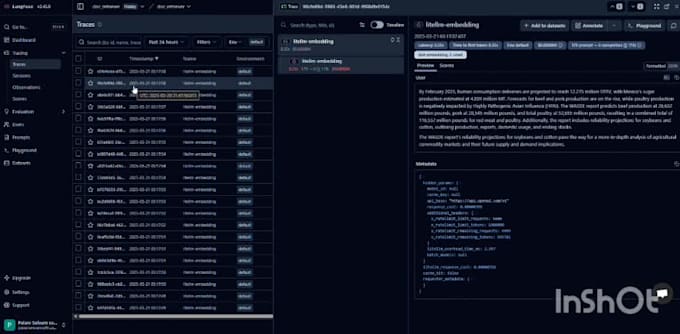

I build scalable, production-ready backends powered by large language models (LLMs) and vector search. This covers integration with platforms like OpenAI, RAG pipelines built with tools such as LangChain, and connections to vector databases including Milvus, Pinecone, or Qdrant.

My expertise lies in building APIs with frameworks like FastAPI or Flask, integrating LLMs (OpenAI, Ollama, or custom models), setting up embedding pipelines, and connecting vector stores for semantic search. I also handle graph databases (Neo4j), metadata management, and complete API delivery.

The service includes backend logic with LangChain/RAG, setup of vector databases and search APIs, authentication and session management (JWT), and optional deployment support (Docker, AWS, Runpod). The resulting code is clean, modular, and well documented, suitable for applications such as AI assistants, document chatbots, or retrieval-based systems.

If you are unsure which approach best fits your needs, contact me and I will reply quickly.

Packages

| Packages | Basic 50€ | Standard 120€ | Premium 250€ |
|---|---|---|---|
| Delivery Time | 3 days | 15 days | 17 days |
| Number of Revisions | 1 | 3 | Unlimited |
| Functional custom GPT app | _ | _ | |
| Functional custom GPT app, Conversation starters, Data enrichment, Custom actions | _ | _ | |
| Functional custom GPT app, Conversation starters, Data enrichment, Web browsing capability, Code interpreter capability, Custom actions | _ | _ | |
